AI governance platforms are software solutions that help teams monitor, control, and explain how AI models behave. They track performance, detect bias and drift, enforce policies, and generate audit trails. But they’re not all the same: some govern ML models, while others, like Superblocks, govern how teams build and use AI inside internal apps.

In this article, we’ll cover:

- Detailed reviews of each platform’s features, pros, cons, and pricing

- How we evaluated these tools

- How to choose the right platform for your needs

Let’s start with a quick comparison.

10 best AI governance platforms

Before we go into the details of each platform, let’s quickly compare them side by side:

1. Superblocks

What it does: Superblocks enables enterprises to scale internal app development across IT, business, and engineering teams without giving up control. Teams can build with AI, the visual editor, or raw code in their preferred IDE. Whichever method they use, Superblocks provides centralized governance and visibility across all internal tooling built on the platform.

Who it's for: Operationally heavy companies that want to democratize development across semi-technical and technical teams without sacrificing governance, compliance, or standardization.

Key features

- AI with enterprise guardrails: Clark, Superblocks’ AI agent, generates applications with full awareness of your security preferences and design systems. You can also define rules to sanitize prompts and validate AI-generated code before deployment.

- Flexible development modes: You can build with AI, a visual editor, or code, all within the same platform. The visual editor gives you full WYSIWYG capabilities, and you can export applications as standard code for further customization in your preferred IDE.

- Central governance controls: You get RBAC, SSO integration, and audit logging through a single admin interface. You can also centrally manage your secrets by integrating with tools like HashiCorp Vault.

- On-prem deployments: The lightweight on-premises agent keeps your data inside your network, while the control plane stays hosted by Superblocks. This avoids the overhead of a fully self-hosted deployment.

- Extensive integrations: Superblocks integrates with a broad range of APIs and databases. This includes REST, gRPC, GraphQL, and OpenAPI. It also connects to your existing software development lifecycle processes, including Git workflows and CI/CD pipelines.
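This overview doesn’t describe the format of Superblocks’ prompt-sanitization rules, so here’s a hypothetical sketch of what such a rule typically does: redact sensitive patterns before a prompt reaches the model and record which rules fired. The rule names, regexes, and `sanitize_prompt` function are illustrative assumptions, not Superblocks’ API.

```python
import re

# Hypothetical redaction rules; a real deployment would define its own list.
REDACTION_RULES = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "AWS_ACCESS_KEY_ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def sanitize_prompt(prompt: str) -> tuple[str, list[str]]:
    """Replace sensitive substrings with placeholders and report which rules fired."""
    findings: list[str] = []
    for label, pattern in REDACTION_RULES.items():
        # subn returns the rewritten string and the number of replacements made
        prompt, count = pattern.subn(f"[REDACTED_{label}]", prompt)
        if count:
            findings.append(label)
    return prompt, findings


clean, hits = sanitize_prompt("Email jane.doe@example.com about SSN 123-45-6789")
print(clean)  # Email [REDACTED_EMAIL] about SSN [REDACTED_US_SSN]
print(hits)   # ['EMAIL', 'US_SSN']
```

The `findings` list is what an audit log or an approval rule would consume, for example to block a prompt outright when a secret key is detected rather than just redacting it.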

Pros

- Eliminates the typical internal tool backlog: Technical and semi-technical users can build end-to-end applications quickly while engineers focus on higher-priority projects.

- Enterprise-grade security built in: It comes with built-in support for SSO, access controls, and audit logs, so you don’t have to worry about an internal app inadvertently violating compliance requirements.

- The platform eliminates vendor lock-in: You can export applications as standard React code and host them independently. You’re also not locked into one way of building: teams can work at the level of abstraction they prefer, whether that's AI, the visual editor, or raw code.

Cons

- Tailored pricing: Teams may find the pricing unclear because Superblocks customizes plans for each organization’s requirements.

- It works at the app level: Superblocks governs the applications built with AI and doesn’t offer model governance features like drift monitoring or model versioning.

Pricing

Superblocks offers custom pricing tailored to your organization. The pricing will depend on the number of builders, end users, and deployment model you choose.

Bottom line

If your AI governance needs are security, access, and auditability for apps that use AI models, Superblocks is the platform to beat. It’s a strong fit for teams that want to move fast with AI while staying in control of what users build and deploy.

2. IBM Watson OpenScale

What it does: IBM Watson OpenScale monitors and governs AI models in production. It continuously tracks your AI models’ performance, detects issues such as bias or drift, explains model decisions, and even suggests corrective actions.

Who it’s for: Large organizations and enterprises that need assurance that their deployed models are fair, explainable, and performing within limits.

Key features

- Bias detection and mitigation: OpenScale monitors your models for unfair outcomes across protected groups like gender, age, and race. You can set fairness thresholds, and it will alert you when bias crosses acceptable limits.

- Drift and performance monitoring: The system tracks when your model accuracy drops or input data drifts from training patterns. It flags performance issues before they impact business decisions and supports feedback loops to trigger retraining.

- Explainability and transparency: OpenScale explains why models made specific decisions. It does this using feature importance breakdowns and what-if analysis. This supports your efforts to meet regulatory compliance requirements and provides transparency for stakeholders.
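Fairness thresholds are easier to reason about with a concrete metric. The sketch below uses the disparate impact ratio (the favorable-outcome rate of a monitored group divided by that of a reference group) and raises an alert below 0.8, the commonly cited "four-fifths rule". It’s a tool-agnostic illustration under those assumptions; the function names are hypothetical, and this is not OpenScale’s API or its exact fairness calculation.

```python
from collections.abc import Sequence


def favorable_rate(outcomes: Sequence[int]) -> float:
    """Share of predictions that are favorable (1 = favorable, 0 = unfavorable)."""
    return sum(outcomes) / len(outcomes)


def disparate_impact(monitored: Sequence[int], reference: Sequence[int]) -> float:
    """Favorable-outcome rate of the monitored group divided by the reference group's."""
    reference_rate = favorable_rate(reference)
    if reference_rate == 0:
        raise ValueError("reference group has no favorable outcomes")
    return favorable_rate(monitored) / reference_rate


def check_fairness(monitored: Sequence[int], reference: Sequence[int],
                   threshold: float = 0.8) -> dict:
    """Flag an alert when the disparate impact ratio falls below the threshold."""
    ratio = disparate_impact(monitored, reference)
    return {"disparate_impact": round(ratio, 3), "alert": ratio < threshold}


# Hypothetical predictions: 3 of 10 favorable for the monitored group, 6 of 10 for the reference group.
monitored_group = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
reference_group = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
print(check_fairness(monitored_group, reference_group))
# {'disparate_impact': 0.5, 'alert': True}
```

A production monitor would compute this over a sliding window of scored requests per protected attribute, but the threshold comparison works the same way.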

Pros

- Industry-leading bias and fairness tools: IBM has built its AI fairness research into OpenScale. It not only detects bias but can also actively help correct it.

- Multi-cloud and model-agnostic: You can monitor models from AWS, Azure, on-premises, and IBM platforms through one centralized dashboard.

- Strong compliance reporting: The tool automatically logs who deployed the model, dataset versions, bias metrics over time, and other metadata.

Cons

- Complex setup: Deploying OpenScale takes significant IT effort and IBM infrastructure knowledge, especially on-premises via Cloud Pak for Data.

- IBM-centric: Even though it supports external models, the platform integrates best with Watson and Cloud Pak for Data.

Pricing

IBM does not openly publish detailed pricing for OpenScale. You pay for each model the platform monitors. Costs vary by context, region, and volume.

Bottom line

Choose OpenScale if you already operate in IBM's data/AI ecosystem. However, it might be too complex and IBM-centric for small companies or those seeking a plug-and-play tool.

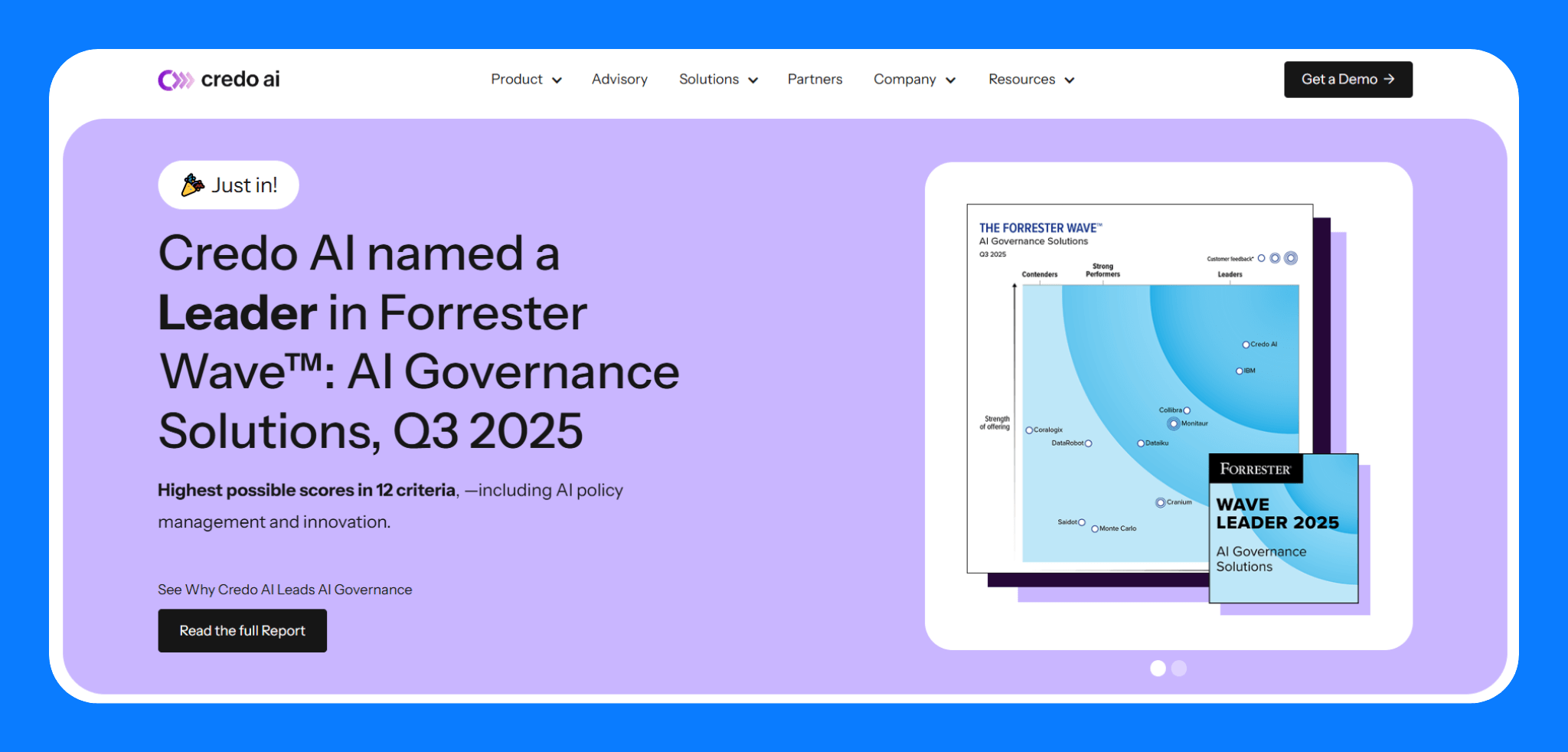

3. Credo AI

What it does: Credo AI gives teams a way to intake use cases, assess them against regulatory frameworks, and generate audit-ready documentation before systems get deployed.

Who it’s for: Large enterprises deploying or integrating LLMs, third-party AI services, or internal models where transparency and audit readiness are required.

Key features

- Policy packs: Credo includes ready-to-use templates aligned with regulations like the EU AI Act and NYC Local Law No. 144. You can apply these policy packs across your AI systems.

- Third-party model review: Credo AI simplifies risk reviews during procurement by providing a standardized way to collect and evaluate AI risk from vendors. All your vendors are consolidated in a dedicated portal.

- GenAI governance for LLM use cases: It helps teams document use case intent, manage model selection, and set appropriate disclosure requirements. It also recommends human-in-the-loop safeguards for higher-risk use cases.

Pros

- Credo supports real-world compliance with legally grounded AI governance frameworks.

- Credo tracks governance maturity over time with dashboards that show policy adoption, risk coverage, and audit readiness.

- It creates a common space for compliance officers, business units, and data scientists to collaborate on AI projects.

Cons

- It doesn’t include model monitoring, latency tracking, or drift detection.

- Defining your own policies or customizing Credo’s policy packs may require workshops and input from multiple stakeholders.

Pricing

Credo AI pricing is available on request. There’s no free tier.

Bottom line

Credo’s value is in structured control points, automated policy recommendations, and a shared system of record for AI governance teams. It excels at turning regulations into workflows that drive responsible AI development at scale.

4. Azure Machine Learning (Azure ML)

What it does: Azure Machine Learning helps teams build, deploy, and govern AI models in production using Microsoft’s cloud-native MLOps tools.

Who it’s for: Enterprise teams that run AI workloads in Azure and need tight integration with cloud policy, networking, identity, and compliance systems.

Key features

- Policy-based model control: Admins can use Azure Policy to allow or block specific foundation models, limiting access to unapproved GenAI models across environments.

- Security and networking governance: Azure ML supports RBAC, private endpoints, and managed virtual networks (VNets). Teams can secure data, APIs, and containers inside isolated networks.

- Responsible AI dashboard: Azure ML's unified toolkit includes model interpretability, fairness assessment, counterfactual analysis, and error analysis. You can create it from a notebook with the Azure ML SDK or directly in the Azure ML studio UI.

- MLOps with governance hooks: Teams can build pipelines with mandatory approval steps, such as required bias checks or human-in-the-loop reviews before an endpoint is deployed. Azure ML logs audit data to support compliance workflows.
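A mandatory approval step boils down to a gate that refuses to deploy until required checks and sign-offs are in place. The sketch below models that logic in plain Python. The risk tiers, check names, `DeploymentRequest` class, and `can_deploy` function are hypothetical and do not reflect Azure ML’s pipeline or policy APIs.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DeploymentRequest:
    model_name: str
    risk_tier: str                                       # hypothetical tiers: "low" or "high"
    checks_passed: set[str] = field(default_factory=set)
    approved_by: Optional[str] = None                    # reviewer who signed off, if any


# Hypothetical policy: checks each risk tier must pass before deployment.
REQUIRED_CHECKS = {
    "low": {"performance"},
    "high": {"performance", "bias"},
}


def can_deploy(req: DeploymentRequest) -> tuple[bool, list[str]]:
    """Return (allowed, reasons); reasons lists every unmet requirement."""
    reasons: list[str] = []
    missing = REQUIRED_CHECKS[req.risk_tier] - req.checks_passed
    if missing:
        reasons.append(f"missing checks: {sorted(missing)}")
    # High-risk models also need an explicit human sign-off.
    if req.risk_tier == "high" and req.approved_by is None:
        reasons.append("high-risk model needs human approval")
    return (not reasons, reasons)


request = DeploymentRequest("credit-model", "high", {"performance"})
allowed, reasons = can_deploy(request)
print(allowed)  # False
print(reasons)
```

Returning every unmet requirement, rather than stopping at the first, gives reviewers a complete list of what still blocks the deployment.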

Pros

- Azure ML integrates responsible AI tooling directly into the ML development workflow.

- Strong integration with enterprise identity, networking, and security tools already in Azure.

- Built-in policy templates and audit automation reduce manual governance overhead.

Cons

- You're locked into Azure's ecosystem.

- May require dedicated Azure policy and security expertise to configure and maintain custom governance controls.

Pricing

Azure Machine Learning’s governance features follow Azure’s usage-based pricing: you pay for the underlying compute and resources you use. Enterprise support and audit-ready compliance capabilities from related services, such as Microsoft Purview and Microsoft Defender for Cloud, are billed separately.

Bottom line

Azure ML offers an integrated, policy-centric approach to AI governance. It’s a particularly good fit for companies that run production AI on the Azure stack.

5. Fiddler AI

What it does: Fiddler AI provides observability, security, and explainability for both ML and LLM-based applications. It helps teams monitor model behavior and enforce guardrails across production pipelines.

Who it's for: Teams deploying models at scale who need to actively monitor, diagnose, and control model behavior in production.

Key features

- Trust scores for LLM outputs: Fiddler uses its proprietary Trust Models to generate calibrated scores for dimensions like hallucination, faithfulness, and safety.

- Real-time guardrails enforcement: It blocks unsafe or non-compliant LLM responses before they reach users.

- Deep observability across ML and LLMs: Fiddler monitors model drift, fairness, performance, hallucination, and bias for both traditional and generative models within a single observability layer.

Pros

- Fiddler delivers unified observability for both ML and LLM systems in one platform.

- The platform offers SaaS, VPC, or GovCloud deployment with SSO integration and granular access controls.

- It enables fairness monitoring and bias mitigation with embedded reporting for compliance.

Cons

- Many features and terminology require time to master for teams new to ML monitoring.

- Quote-based pricing makes it harder for smaller teams to evaluate costs upfront.

Pricing

Fiddler AI offers Lite, Business, and Premium tiers. It’s all quote-based. The Lite plan supports up to 10 models, 10 user seats, and 500 tracked features. Most capabilities in the Business and Premium tiers are custom or unlimited.

Bottom line

Fiddler AI is built for teams that already deploy models and want visibility, control, and trust metrics.

6. DataRobot

What it does: DataRobot offers automated machine learning with built-in governance. It handles everything from model building and documentation to deployment, monitoring, and risk mitigation.

Who it's for: Enterprise AI, MLOps, and compliance teams that need unified governance and observability across internal, third‑party, or multi-cloud models.

Key features

- Automated model documentation across all models: DataRobot generates compliance reports automatically. It does this even for models you’ve built or deployed outside its platform.

- Enterprise-grade auditability and traceability: The platform tracks feature, model, and lineage metadata, plus deployment changes, versioning, and prediction logs.

- LLM evaluation and real-time intervention: DataRobot runs synthetic and real-data tests on generative AI models to detect risk, hallucination, or compliance issues during evaluation and in production.

Pros

- DataRobot unifies predictive and generative AI governance within a single platform.

- It balances speed and governance by letting teams deploy models fast while staying compliant.

- It supports third-party or custom models via import APIs, making it flexible for hybrid tooling environments.

Cons

- You get limited flexibility outside its ecosystem. It works best when used end-to-end.

- It requires platform onboarding and configuration.

Pricing

DataRobot doesn't publish pricing, but it has a free trial. You must request quotes via a demo or sales contact.

Bottom line

DataRobot is fast and accessible for teams without strong ML expertise. It lets non-coders build models while ensuring proper vetting, documentation, and production tracking.

7. SolasAI

What it does: SolasAI detects, quantifies, and mitigates algorithmic bias and disparity in machine learning models. It integrates with existing pipelines to generate fairness insights and alternative models without disrupting model workflows.

Who it's for: Compliance officers, data scientists, and regulated enterprises that need to proactively identify bias, ensure fairness, and meet regulatory scrutiny.

Key features

- Detects and quantifies disparities: SolasAI analyzes model outputs across sensitive groups and identifies where bias exists.

- Illuminates disparity drivers: It explains which features or cohorts drive both a model’s predictive value and its discriminatory outcomes.

- Generates fairer alternatives: The platform suggests viable model changes that reduce disparity while preserving predictive power.

Pros

- SolasAI embeds legal and regulatory expertise into its algorithms.

- It integrates with existing model pipelines. You don’t have to rebuild models.

- It helps teams generate disparity reports that support audits and align with current ethical and regulatory guidance.

Cons

- SolasAI focuses exclusively on bias and fairness. You’d need other tools for model registry, observability, or access policy enforcement.

- It is best suited to compliance-heavy domains. General-purpose ML/GenAI teams may find its scope too narrow.

Pricing

SolasAI uses a quote-based pricing model. The company does not list pricing tiers publicly. You must contact the sales team.

Bottom line

If your priority is bias detection and mitigation, SolasAI offers a practical, expert-vetted solution. It excels at identifying algorithmic disparity, explaining its causes, and suggesting corrective model variants.

8. Censius

What it does: Censius automates model observability via SDKs or APIs. Once you integrate it, you can register models, set up monitors, and visualize drift for both ML models and LLMs.

Who it's for: Small to mid-sized data teams or startups with ML models in production who need actionable insights into deployed models without a heavy setup.

Key features

- Automated monitors and alerts: Censius auto-initializes monitors for data drift, data quality, model performance, and bias when you connect a model. The platform tracks drift for every feature automatically and routes alerts to Slack, email, or other channels.

- Explainability drill-downs: Censius surfaces global, local, and cohort-level attribution insights that pinpoint why models mispredicted.

- Model version comparison & analytics: Teams can compare model variants side-by-side, evaluate ROI impact, and build custom dashboards that draw from prediction logs and traffic metadata.
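Per-feature drift monitors usually compare a feature’s production distribution against its training baseline. One widely used statistic is the Population Stability Index (PSI), sketched below on pre-binned proportions. We’re assuming PSI and the common rule-of-thumb cutoffs (below 0.1 stable, 0.1 to 0.25 moderate, above 0.25 significant) for illustration; Censius and other tools may use different metrics, and `psi` and `drift_level` are hypothetical helpers, not part of any vendor SDK.

```python
import math
from collections.abc import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a production distribution.

    Both inputs are per-bin proportions over the same bins (each summing to ~1).
    """
    if len(expected) != len(actual):
        raise ValueError("both distributions must use the same bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # clamp empty bins to avoid log(0)
        total += (a - e) * math.log(a / e)
    return total


def drift_level(score: float) -> str:
    """Map a PSI score to a rule-of-thumb drift label (assumed cutoffs)."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate"
    return "significant"


# Hypothetical feature distribution over four bins.
baseline = [0.25, 0.25, 0.25, 0.25]    # training data
production = [0.10, 0.20, 0.30, 0.40]  # recent production traffic
score = psi(baseline, production)
print(f"PSI = {score:.3f} ({drift_level(score)})")  # PSI = 0.228 (moderate)
```

Running a check like this for every feature on a schedule, and alerting when the label changes, approximates the automated per-feature drift tracking described above.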

Pros

- Teams integrate via SDK or API and can get working observability within hours.

- Censius combines embedding and LLM monitoring with tabular bias detection.

- Both technical and non‑technical stakeholders can self-serve insights on model behavior and fairness.

Cons

- Censius does not enforce governance workflows like policy approvals or risk-based intake systems.

- It doesn’t serve as a model registry. You need other tools to track model lineage or manage deployments.

Pricing

Censius offers a free trial. After the trial, pricing is custom and usage-based: you pay based on model volume, data ingestion, and tracked features. The starter plan supports up to 5 models, 500k predictions per model, 500 features, and 3 months of data retention.

Bottom line

Choose Censius if you need code-first observability for ML or LLM models in production. It excels at identifying and troubleshooting anomalies across model decisions in real time.

9. Holistic AI

What it does: Holistic AI tracks AI systems across your organization. It scores them for legal and ethical risk, and enforces controls based on real-world regulations like the EU AI Act, NYC bias audit law, and NIST guidelines.

Who it's for: Enterprises with significant AI deployments and regulatory exposure, especially those preparing for AI regulations (EU AI Act, NYC bias audit law).

Key features

- AI system inventory: Holistic AI scans and registers all internal and vendor-owned AI systems. It tags them with use case, owner, risk category, and data type.

- Automated risk assessments: The platform walks teams through structured risk reviews based on live regulatory frameworks, then generates audit-ready documentation.

- Policy enforcement workflows: You can set guardrails by system type. For example, you can require fairness audits for high-risk tools or flag unauthorized biometric use.

Pros

- It aligns governance with actual regulations, not just internal best practices.

- It gives enterprises a system-level view of AI use, including shadow AI and third-party tools.

- It supports non-technical users with prebuilt compliance workflows and templates.

Cons

- It demands extensive information gathering, cross-team collaboration, and potentially new processes. Success depends on the company's willingness to adopt a governance culture.

- It focuses on high-level risk management rather than live monitoring of model drift or accuracy.

Pricing

Holistic AI offers a custom enterprise subscription. It has no public pricing.

Bottom line

Holistic AI is the best fit for organizations that treat AI governance as a compliance function. It works well for regulated industries that need to assess risk across AI systems, not just monitor model behavior.

10. Arize AI

What it does: Arize AI gives teams full-lifecycle visibility into how AI models and agents behave. It covers prompt engineering, offline evaluation, and real-time monitoring in production.

Who it's for: AI/ML engineers and platform teams running LLMs or agent-based systems who want to trace, debug, and improve AI performance quickly.

Key features

- Prompt and agent tracing: Arize logs every step of a model or agent’s execution, including inputs, outputs, and intermediate reasoning. It integrates with open standards like OpenTelemetry for tracing and logging.

- Automated GenAI evaluation: You can test prompts and agents before deployment using side-by-side comparisons, regression testing, or LLM-based evaluators for hallucination, relevance, and bias.

- Live model monitoring: Arize tracks drift, latency, and quality metrics in production for both structured and unstructured tasks. It surfaces anomalies as they happen.

Pros

- Arize combines prompt experimentation, evaluation, and production monitoring into a single tool.

- You can trace model behavior with open standards and use the open-source Phoenix project to avoid lock-in.

- It’s one of the few platforms built natively for LLMs and multi-agent frameworks, not just classic ML models.

Cons

- Arize does not enforce policy or governance workflows like RBAC or compliance intake.

- You have to set up instrumentation SDKs or OpenTelemetry, which may take engineering effort upfront.

Pricing

The open-source version, Phoenix OSS, is free for tracing and evaluation. The free tier of the hosted platform supports 1 user and up to 1M trace spans per 14 days. The paid plan starts at $50/month per workspace, with quotas on spans and storage. The enterprise plan is custom and supports unlimited users, custom storage, and billions of trace spans.

Bottom line

Arize is designed for debugging and improving LLMs or agents in a traceable way.

How we evaluated these AI governance platforms

We researched 10 platforms to find the best AI governance software.

Here's what we looked for:

Policy enforcement

We checked if platforms enforce organizational AI policies automatically. Good platforms include access controls that limit who can deploy models, audit trails that track all model activity, and automated compliance checks. Policy enforcement prevents unauthorized AI usage and keeps organizations compliant with regulations.
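Access controls and audit trails work together: every attempted action is checked against a permission model and logged whether or not it succeeds. Here’s a minimal sketch of that pattern, assuming a hypothetical role-to-permission mapping and an in-memory audit log; a real platform would persist the log and source roles from SSO or an identity provider.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "viewer": {"view_model"},
    "data_scientist": {"view_model", "register_model"},
    "ml_admin": {"view_model", "register_model", "deploy_model"},
}

# In-memory audit trail; a real system would write to durable, append-only storage.
audit_log: list[dict] = []


def authorize(user: str, role: str, action: str, resource: str) -> bool:
    """Check the role's permissions and record an audit entry, allowed or not."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())  # unknown roles get no permissions
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "resource": resource,
        "allowed": allowed,
    })
    return allowed


denied = authorize("alice", "data_scientist", "deploy_model", "churn-model")
granted = authorize("bob", "ml_admin", "deploy_model", "churn-model")
print(denied, granted)  # False True
print(len(audit_log))   # 2
```

Logging denied attempts, not just successful ones, is what lets auditors spot unauthorized usage patterns after the fact.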

AI + app integration

We tested how easily platforms connect to existing AI application stacks. This means checking support for cloud platforms, programming languages, and model formats. The best platforms integrate easily with tools like Snowflake or trigger actions via API without requiring major engineering changes.

Monitoring and observability

We evaluated each platform's ability to monitor models and apps in real time. Strong monitoring catches issues early and prevents AI failures from impacting business operations.

Additional factors we considered:

- Scalability: Can the platform handle increasing data and model loads as your organization grows?

- Enterprise security: Does it support SSO/SAML integration, role-based access control, data encryption, and compliance certifications?

- Ease of adoption: How steep is the learning curve? Can teams start using it quickly without extensive training?

- Customization options: How much can you tailor the platform's behavior to your specific needs?

Which AI governance platform should you choose?

The right platform depends on your specific needs and use cases. After testing all these tools, we found that each platform excels in different areas.

If we frame it broadly:

- Superblocks is great if your focus is on rapidly building internal AI-powered applications with governance baked in at the app level.

- IBM OpenScale and Azure ML are strong choices if you’re an enterprise already invested in those respective ecosystems (IBM or Microsoft).

- Credo AI and Holistic AI stand out if your priority is comprehensive risk management and compliance. They help set policies and check models against those systematically.

- Fiddler, Arize, and Censius all offer monitoring and explainability, while SolasAI specializes in bias detection and mitigation. Among the monitoring tools, Censius provides the most entry-level, accessible observability option.

- DataRobot is best if you want an all-in-one solution from model building to deployment with governance layers throughout.

My final verdict

The best AI governance platform is the one that aligns most closely with your organization’s AI priorities.

Superblocks gives you a governable foundation for operational AI. You can enforce access policies, route GenAI usage through approvals, log every interaction, and isolate data in secure environments, all from within the same platform where your apps run.

Choose Superblocks if you:

✅ Need to monitor and govern LLM usage across internal apps and process automations

✅ Have a backlog of internal tools and need to empower non-technical teams safely

✅ Need fast development without compromising compliance

✅ Want to prevent shadow AI by centralizing development

❌ Choose alternatives if you need ML model monitoring. That’s not its focus.

Build governed and secure AI apps with Superblocks

Superblocks gives enterprises a secure, centrally governed platform to build internal applications powered by AI. IT, business, and engineering teams can build apps using AI prompts, code, or a visual editor while maintaining enterprise security and governance standards.

Here’s a recap of the key features that make this possible:

- Flexible development modalities: Let teams build using code, GenAI, or a full WYSIWYG visual editor, all synced in real time.

- Centralized governance: Control access, enforce security policies, and track usage across apps with enterprise-grade permissioning.

- AI app generation with guardrails: Set custom prompts, apply sanitization rules, and enforce design systems with an extensible LLM.

- Integrations with your SDLC: Connect to any API, database, Git provider, CI/CD pipeline, or observability tooling.

- Expert implementation support: Forward-deployed engineers help you launch governed apps quickly, securely, and at scale.

If you’re solving for engineering bottlenecks, shadow IT, or AI sprawl, Superblocks gives you a single pane of glass to bring AI into your development toolkit. Schedule a demo with one of our product experts to get started.

Frequently Asked Questions

What is an AI governance platform?

An AI governance platform is software that helps you manage AI use across your organization. It supports oversight, compliance, and accountability. It covers access controls, audit logs, fairness checks, and policy enforcement.

What should I look for in AI governance tools?

You should look for features that align with your compliance and control goals. At a minimum, check for:

- Monitoring and alerts for model behavior

- Explainability tools to understand decisions

- Role-based access and approval workflows

- Integration with your dev and compliance stack

- Usability across both technical and semi-technical teams

What’s the difference between governance and explainability?

Explainability is a component of governance. It refers to techniques and tools that help you understand how a model makes decisions. Governance encompasses the policies, processes, and oversight mechanisms around AI.

How does Superblocks enforce AI governance?

Superblocks enforces governance by controlling access, permissions, and audit logs at every step of the app lifecycle. It applies RBAC, SSO, approval workflows, and security policies across all AI-built apps.

Can I use Superblocks for model monitoring?

No, Superblocks does not monitor model performance. It governs how teams use AI inside internal apps. If you need performance metrics, use tools like Arize or Fiddler.

Which tools are best for enterprise security and audit?

Superblocks offers audit trails for user actions and supports enterprise security features like SSO, SCIM, granular access controls, and secrets management. You can also keep your sensitive data in your network.

What’s the most flexible platform on this list?

Superblocks offers the most flexibility for building apps. Teams can use AI, code, or a visual editor to ship tools. Arize offers the most flexibility for model monitoring. It supports any model, cloud, or data type.
